The following is a description of Wearable Wisdom (Pataranutaporn et al., 2020), a next-generation intelligent software agent developed by a group of researchers at the Massachusetts Institute of Technology (MIT) that includes Pattie Maes.

This description borrows heavily from Pataranutaporn et al. (2020), in places using the authors' own words for accuracy.

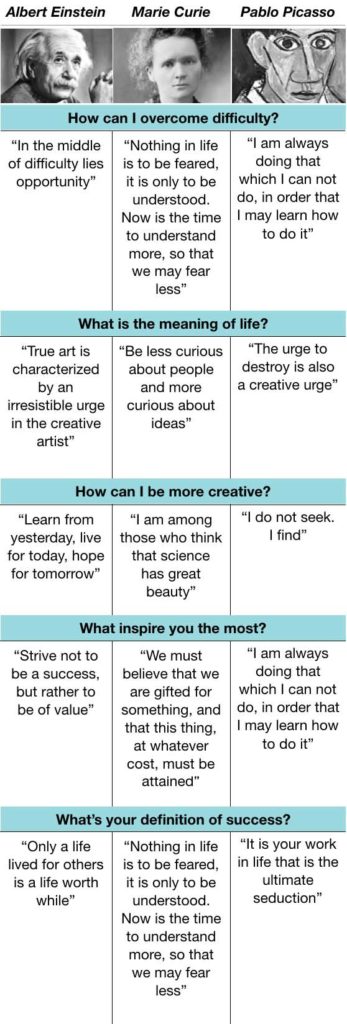

Wearable Wisdom is “an intelligent, audio-based system for mediating wisdom and advice from mentors and personal heroes to a user. It does so by performing automated semantic analysis on the collected wisdom database and generating a simulated voice of a mentor sharing relevant wisdom and advice with the user” (Pataranutaporn et al., 2020, p. 1).

The researchers state that their aim is to leverage state-of-the-art wearables, natural language processing (NLP), and intelligent agent systems to create technology that gives the user motivational feedback beyond factual information.

The ideas behind Pataranutaporn et al.'s (2020) design of Wearable Wisdom, which provides just-in-time advice, real-time feedback, and motivational wisdom to users, can be leveraged to design a similar system that delivers just-in-time advice and real-time feedback as digital nudges, prompting students to get back on task when they are distracted by their smartphones in class.

System implementation

Below is a description of the system implementation in the researchers' own words.

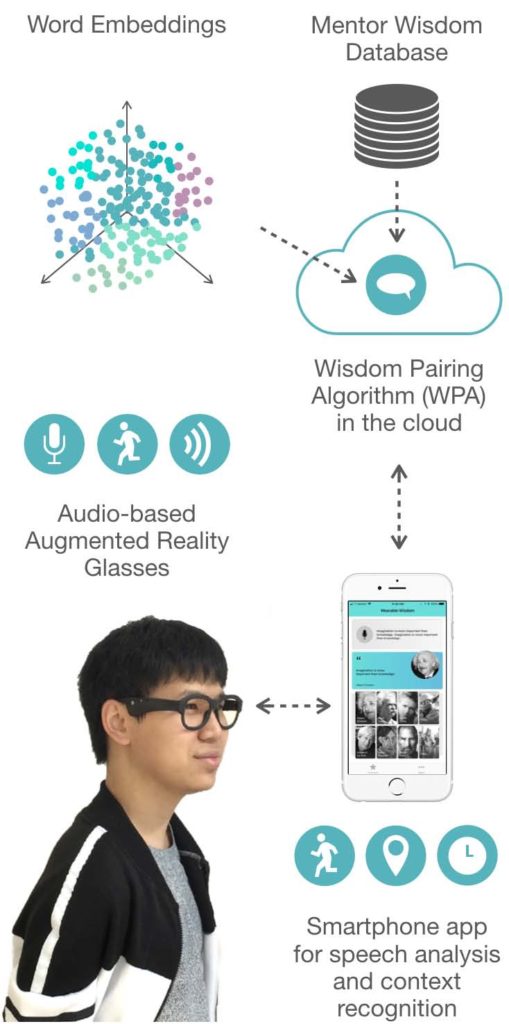

The system consists of a wearable audio I/O device, a smartphone capable of real-time utterance recognition and context detection, and a back-end infrastructure for storing the mentor profiles and wisdom, processing user input and providing responses.

We use an off-the-shelf, Bluetooth-enabled audio interface in a glasses form factor, namely the “Bose Frames”, as the Wearable Wisdom device. We chose glasses as they represent a socially acceptable form factor that users could wear continuously, thereby always having quick and convenient access to advice from mentors. These audio-based augmented reality glasses provide a private audio stream without blocking the ear canal, thus still allowing auditory awareness of the surroundings. They also contain an Inertial Measurement Unit (IMU), which allows the glasses to sense the physical activity of the wearer. The audio interface connects to the Wearable Wisdom mobile app, which receives, processes, and transmits back audio content.

We determine the location of the user using iOS CoreLocation framework, and the current date and time with the iOS Foundation framework. By means of the user’s location we determine whether the user is at home, at work, or at the gym. By means of the date and time, we deduce whether the user is in a productivity or recreational context. These two contextual features are used to infer which mentor’s advice would be most appropriate for the user’s context based on the mentor domain expertise recorded in the mentor profile.
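
The context-to-mentor mapping described above can be illustrated with a short sketch. This is not the researchers' code: the `Mentor` fields, the weekday 09:00 to 17:00 rule for a productivity context, and the mentor named "Coach" are all assumptions made for illustration, and on the device the place and time would come from CoreLocation and Foundation rather than from function arguments.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Mentor:
    name: str
    places: frozenset  # hypothetical: places where this mentor's expertise applies
    modes: frozenset   # hypothetical: "productivity" and/or "recreation"


def infer_mode(now: datetime) -> str:
    # Assumption: weekdays between 09:00 and 17:00 are productivity time.
    is_weekday = now.weekday() < 5
    return "productivity" if is_weekday and 9 <= now.hour < 17 else "recreation"


def rank_mentors(mentors, place: str, now: datetime):
    """Order mentors by how many contextual features match their profile."""
    mode = infer_mode(now)

    def score(mentor: Mentor) -> int:
        return (place in mentor.places) + (mode in mentor.modes)

    # sorted() is stable, so mentors with equal scores keep their input order.
    return sorted(mentors, key=score, reverse=True)


mentors = [
    Mentor("Einstein", frozenset({"work"}), frozenset({"productivity"})),
    Mentor("Coach", frozenset({"gym"}), frozenset({"recreation"})),  # hypothetical mentor
]
best = rank_mentors(mentors, "gym", datetime(2020, 3, 7, 10, 0))[0]
```

A simple additive score keeps the two contextual features independent, so a further signal such as recognized physical activity could be added as one more term.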

We also gathered the accelerometer data obtained from the IMU embedded in the glasses and the phone. Using an off-the-shelf machine learning framework, we performed a preliminary assessment of this data. Given this, and previous work, IMU data can be used to further recognize the user activity such as sitting still, walking, exercising, eating, drinking, and more.
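
To make the idea of activity recognition from accelerometer data concrete, here is a deliberately simple sketch. It is not the machine learning framework the researchers used: it only separates "still" from "moving" by thresholding the spread of the acceleration magnitude over a window, and the threshold value and the sample format (x, y, z in g) are assumptions.

```python
import math
from statistics import pstdev


def magnitude(sample):
    """Euclidean norm of one (x, y, z) accelerometer sample."""
    x, y, z = sample
    return math.sqrt(x * x + y * y + z * z)


def classify_window(samples, still_threshold=0.05):
    # Assumption: a window whose magnitude barely varies means the wearer is still.
    spread = pstdev([magnitude(s) for s in samples])
    return "still" if spread < still_threshold else "moving"


resting = [(0.0, 0.0, 1.0)] * 10                     # constant 1 g: gravity only
shaking = [(0.0, 0.0, 1.0), (0.0, 0.0, 2.0)] * 5     # magnitude alternates 1 g / 2 g
labels = (classify_window(resting), classify_window(shaking))
```

Distinguishing finer-grained activities such as eating or drinking would need richer features and a trained classifier, which is outside what this sketch shows.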

User interaction flow

Step 1: Begin Interaction

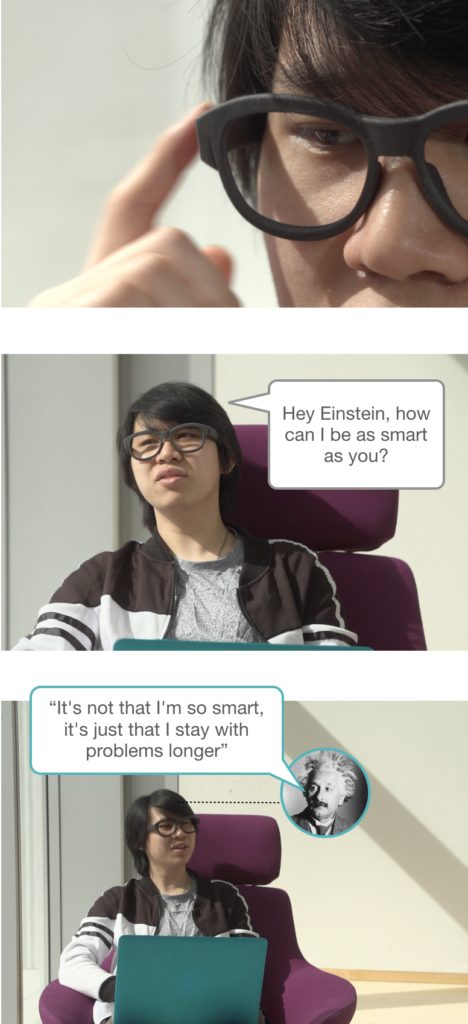

To ask for advice or wisdom, the user double-taps on the glasses frame and asks a question beginning with the mentor name such as “Hey Einstein, how can I be more creative?”
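
One way the mentor name could be separated from the question is sketched below. The paper excerpt does not say how this is done, so the regular expression, the `split_utterance` helper, and the list of known mentors are assumptions. The comma is optional because a speech recognizer may not emit punctuation.

```python
import re

# "Hey <name>[,] <question>"; case-insensitive, comma optional.
_PATTERN = re.compile(
    r"^\s*hey\s+([A-Za-z][\w'-]*)\s*,?\s*(.+)$",
    re.IGNORECASE | re.DOTALL,
)


def split_utterance(utterance, known_mentors):
    """Return (mentor, question), or None if no known mentor is addressed."""
    match = _PATTERN.match(utterance)
    if not match:
        return None
    name, question = match.group(1), match.group(2).strip()
    for mentor in known_mentors:
        if mentor.lower() == name.lower():
            return mentor, question
    return None


parsed = split_utterance("Hey Einstein, how can I be more creative?", ["Einstein"])
```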

Step 2: Utterance Extraction

Once the double tap has been detected, the iOS Speech framework starts recognizing spoken words from live audio, without the need to store the user’s speech data. The speech recognizer is configured to use the microphone integrated in the glasses. When no words are detected for over 2 seconds, speech recognition stops.
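
The two-second stop rule can be expressed independently of any speech API. The sketch below is an assumption about the control logic only, with hypothetical names: it does not use the iOS Speech framework, and timestamps are passed in as plain seconds.

```python
class SilenceTimeout:
    """Signals that recognition should stop after a period with no new words."""

    def __init__(self, timeout_s=2.0):
        self.timeout_s = timeout_s
        self.last_activity = None

    def start(self, now):
        # Called when the double tap starts recognition.
        self.last_activity = now

    def on_word(self, now):
        # Called each time the recognizer reports a new word.
        self.last_activity = now

    def should_stop(self, now):
        # "Over 2 seconds" is read here as strictly more than the timeout.
        if self.last_activity is None:
            return False
        return now - self.last_activity > self.timeout_s


timer = SilenceTimeout()
timer.start(0.0)
timer.on_word(1.0)
```

With the last word at 1.0 s, `timer.should_stop(3.0)` is still false and `timer.should_stop(3.1)` is true.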

Step 3: Wisdom Computation

The utterance and selected mentor are sent using a HTTP POST request from the iOS app to a Python server. The server runs the WPA to understand the content of the utterance, and then selects the most relevant quote within the wisdom database of the selected mentor. This quote is sent back as part of the POST request’s response.

Step 4: Wisdom Delivery

Custom speech profiles for the initial set of mentors available in the Wearable Wisdom system were designed; speech profiles allow each wisdom quote to embody certain characteristics of the mentor such as their accent, gender, and age.
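
A speech profile of this kind can be pictured as a small record attached to each mentor. The sketch below is an assumption about the data shape only: the field names follow the three characteristics listed above, while the values, the `build_speech_request` helper, and the request layout are hypothetical and not tied to any particular text-to-speech engine.

```python
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class SpeechProfile:
    accent: str
    gender: str
    age: int


def build_speech_request(quote, profile):
    """Bundle a quote with the voice characteristics a synthesizer would need."""
    return {"text": quote, "voice": asdict(profile)}


profile = SpeechProfile(accent="placeholder-accent", gender="placeholder", age=60)
request = build_speech_request("placeholder quote", profile)
```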